How to mitigate AI-driven power concentration

I. FLI launching new grants to oppose and mitigate AI-driven power concentration

AI development is on course to concentrate power within a small number of groups, organizations, corporations, and individuals. Whether this entails the hoarding of resources, media control, or political authority, such concentration would be disastrous for everyone. We risk governments tyrannising with Orwellian surveillance, corporate monopolies crushing economic freedom, and rampant decision automation subverting meaningful individual agency. To combat these threats, FLI is launching a new grants program of up to $4M to support projects that work to mitigate the dangers of AI-driven power concentration and move towards a better world of meaningful human agency.

II. FLI’s position on power concentration

The ungoverned acceleration of AI development is on course to further concentrate the bulk of power amongst a very small number of organizations, corporations, and individuals. This would be disastrous for everyone.

Power here could mean several things. It could mean the ownership of a decisive proportion of the world’s financial, labor or material resources, or at least the ability to exploit them. It could be control of public attention, media narratives, or the algorithms that decide what information we receive. It could simply be a firm grip on political authority. Historically, power has entailed some combination of all three. A world where the transformative capabilities of AI are rolled out unfairly or unwisely will likely see most if not all power centres seized, clustered and kept in ever fewer hands.

Such concentration poses numerous risks. Governments could weaponize Orwellian levels of surveillance and societal control, using advanced AI to supercharge social media discourse manipulation. Truth decay would be locked in and democracy, or any other meaningful public participation in government, would collapse. Alternatively, giant AI corporations could become stifling monopolies with powers surpassing elected governments. Entire industries and large populations would increasingly depend on a tiny group of companies – with no satisfactory guarantees that benefits will be shared by all. In both scenarios, AI would secure cross-domain power within a specific group and render most people economically irrelevant and politically impotent. There would be no going back. Another scenario would leave no human in charge at all. AI powerful enough to command large parts of the political, social, and financial economy is also powerful enough to do so on its own. Uncontrolled artificial superintelligences could rapidly take over existing systems, and then continue amassing power and resources to achieve their objectives at the expense of human wellbeing and control, quickly bringing about our near-total disempowerment or even our extinction.

What world would we prefer to see?

We must reimagine our institutions, incentive structures, and technology development trajectory to ensure that AI is developed safely, empowers humanity, and helps solve the most pressing problems of our time. AI has the potential to unlock an era of unprecedented human agency, innovation, and novel methods of cooperation. Combatting the concentration of power requires us to envision alternatives and viable pathways to reach them.

Open-sourcing AI models is sometimes hailed as a panacea. The truth is more nuanced: today's leading technology companies grew and aggregated massive amounts of power even before generative AI, despite most core technology products having open-source alternatives. Further, the benefits of "open" efforts often still favor the entities with the most resources. Hence, open source may be a tool for making some companies less dependent upon others, but it is insufficient to stop the continued concentration of power or to meaningfully put power into the hands of the general populace.

III. Topical focus:

Projects will fit this call if they address power concentration and are broadly consistent with the vision put forth above. Possible topics include but are not limited to:

- “Public AI”, in which AI is developed and deployed outside of the standard corporate mode, with greater public control and accountability – how it could work, an evaluation of different approaches, specifications for a particular public AI system;

- AI assistants loyal to individuals as a counterweight to corporate power – design specifications for such systems, and how to make them available;

- Safe decentralization: how to decentralize governance of AI systems while still preventing the proliferation of high-risk systems;

- Effectiveness of open-source: when has open-source mitigated vs. increased power concentration and how could it do so (or not) with AI systems;

- Responsible and safe open-release: technical and social schemes for open release that take safety concerns very seriously;

- Income redistribution: exploring agency in a world of unvalued labour, and redistribution beyond taxation;

- Incentive design: how to set up structures that incentivise benefit distribution rather than profit maximisation, learning from (the failure to constrain) previous large industries with negative social effects, such as the fossil fuel industry;

- How to equip our societies with the infrastructure, resources and knowledge to convert AI insights into products that meet true human needs;

- How to align economic, sociocultural and governance forces to ensure powerful AI is used to innovate, solve problems, and increase prosperity broadly;

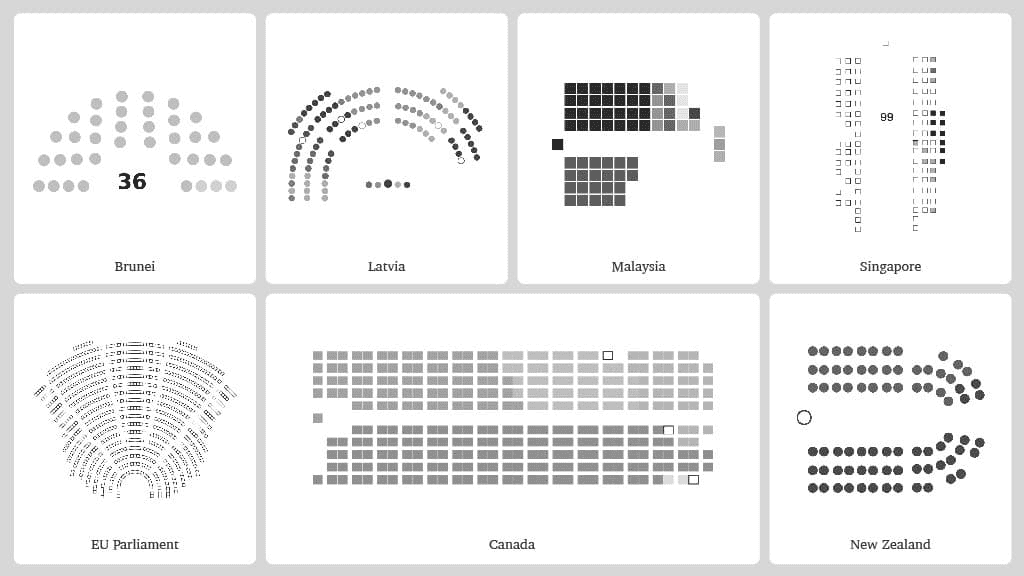

- Preference aggregation: New mechanisms for discerning public preferences on social issues, beyond traditional democratic models;

- Legal remedies: how to enable effective legal action against possible abuses of power in the AI sector;

- Meta: Projects that address the issue of scaling small pilot projects to break through and achieve impact;

- Meta: Mechanisms to incentivize adoption of decentralized tools to achieve a societally significant critical mass.

Examples of directions that would probably not make compelling proposals:

- Projects that are implicitly or explicitly dismissive of (a) the rapid and real growth in AI capability, or (b) the need for advanced AI systems to be safe, or (c) technical or scientific realities such as vulnerabilities of AI systems to jailbreak or guardrail removal.

- Projects that simply double down on existing anti-concentration mechanisms or processes, rather than developing innovative approaches to the issues created by AI in particular.

- Projects that equate “democratization” with just making particular AI capabilities widely available.

- Projects likely to transfer power to AI systems themselves rather than people (even if decentralized).

- Projects that aren’t focused on AI as either a driver of the problem or of solutions.

IV. Evaluation Criteria & Project Eligibility

Grants totaling between $1M and $4M will be available to non-profit institutions, civil society organizations, and academics for projects of up to three years' duration. Future grantmaking may be open to the charitable arms of for-profit companies. The number of grants awarded will depend on the number of promising applications. Applications will be subject to a competitive process of external and confidential expert peer review. Renewal funding is possible and contingent on submitting timely reports demonstrating satisfactory progress.

Proposals will be evaluated according to their relevance and expected impact.

The recipients could choose to allocate the funding in myriad ways, including:

- Creating a specific tool to be scaled up at a later date;

- Coordinating a group of actors to tackle a set problem;

- Technical research reports on new systems;

- Policy research;

- General operating support for existing organizations doing work in this space;

- Funding for specific new initiatives or even new organizations.

V. Application Process

Applicants will submit a project proposal per the criteria below. Applications will be accepted on a rolling basis and reviewed in one of two rounds. The first round of review will begin on July 30, 2024, and the second round will begin on September 15, 2024.

Project Proposal:

- Contact information of the applicant and organization

- Name of tax-exempt entity to receive the grant and evidence of tax-exempt status

- If you are not part of an academic or non-profit organisation, you may need to find a fiscal sponsor to receive the grant. We can provide suggestions for you. See ‘Who is eligible to apply?’ in the FAQs.

- A project summary not exceeding 200 words, explaining the work

- An impact statement not exceeding 200 words detailing the project’s anticipated impact on the problem of AI-enabled power concentration

- A statement on track record, not exceeding 200 words, explaining previous work, research, and qualifications relevant to the proposed project

- A detailed description of the proposed project. The proposal should be at most 8 single-spaced pages in 12-point Times Roman font or equivalent, including figures and captions. A reference list should be appended; it does not count toward the page limit and has no length limit of its own. Larger financial requests are likely to require more detail.

- A detailed budget over the life of the award. We anticipate funding projects in the $100-500k range. The budget must include justification and utilization distribution (drafted or reviewed by the grant officer, or equivalent, at the applicant's institution). Please make sure your budget includes administrative overhead if your institution requires it (15% is the maximum allowable overhead; see below).

- Curricula Vitae for all project senior personnel

Project proposals will undergo a competitive process of external and confidential expert peer review and will be evaluated according to the criteria described above. A review panel will be convened to produce a final rank ordering of the proposals and to make budgetary adjustments if necessary. Awards will be granted and announced after each review period.

VI. Background on FLI

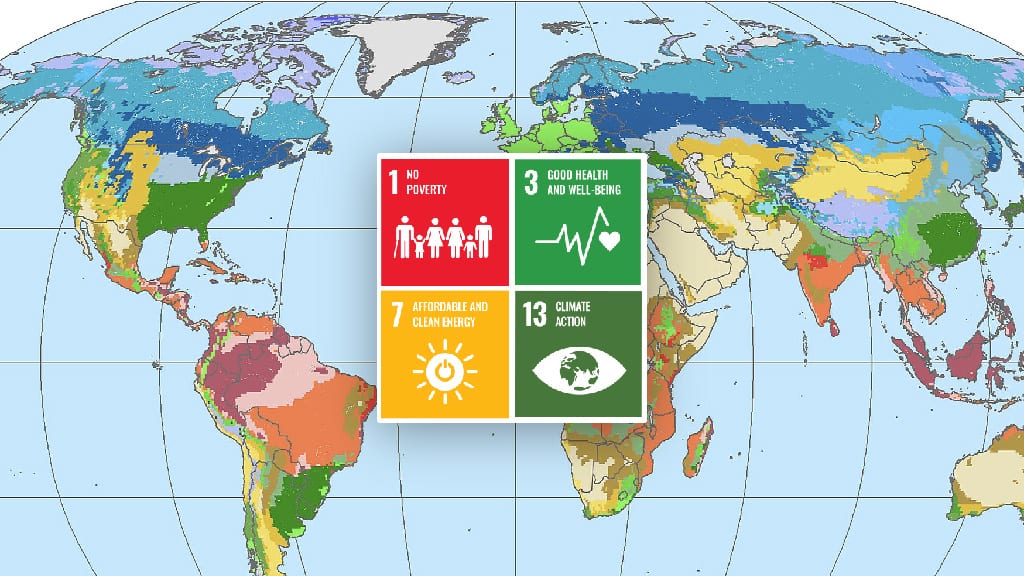

The Future of Life Institute (FLI) is an independent non-profit, established in 2014, that works to steer transformative technology towards benefiting life and away from extreme large-scale risks. FLI presently focuses on issues of advanced artificial intelligence, militarized AI, nuclear war, bio-risk, biodiversity preservation and new pro-social platforms. The present request for proposals is part of FLI’s Futures Program, alongside our recent grants for realising aspirational futures through the SDGs and AI governance.

FAQ

Who is eligible to apply?

Individuals, groups or entities working in academic and other non-profit institutions are eligible. Grant awards are sent to the applicant's institution, and the institution's administration is responsible for disbursing the awards. University applicants should list, when submitting their application, the appropriate grant administrator at their institution whom we should contact.

If you are not affiliated with a non-profit institution, there are many organizations that can help administer your grant. If you need suggestions, please contact FLI.

Can international applicants apply?

Yes, applications are welcomed from any country. If a grant to an international organization is approved, we will seek an equivalency determination before proceeding with payment. Your institution will be responsible for furnishing any requested information during the due diligence process. Our grants manager will work with selected applicants on the details.

Can I submit an application in a language other than English?

All proposals must be in English. Since our grant program has an international focus, we will not penalize applications by people who do not speak English as their first language. We will encourage the review panel to be accommodating of language differences when reviewing applications.

What is the overhead rate?

The highest allowed overhead rate is 15%.

How will payments be made?

FLI may make the grant directly, or utilize one of its donor-advised funds or other funding partners. In the latter case, although FLI will make the grant recommendation, the ultimate grantor will be the institution where our donor-advised fund is held. That institution will conduct its own due diligence, and your institution is responsible for furnishing any requested information. Our grants manager can work with selected applicants on the details.

Will you approve multi-year grants?

Multi-year grant applications are welcome, though your institution will not receive a single award letter covering multiple years of support. We may express interest in supporting a multi-year project, but we will issue annual, renewable award letters and payments. Brief interim reports are necessary to proceed with the next planned installment.

How many grants will you make?

We anticipate awarding between $1M and $4M in grants; however, the actual total and number of grants will depend on the quality of the applications.
